August 31, 2020 | 09:30 | Reading time: ca. 10 min

Working in Your Home Office - Part II

Terminal Server - A look back

The second part of the home office series is about terminal servers. The concept is quite old, so we cannot avoid a short excursion into the past:

It leads us back to the late 1940s and the 1950s, to the very beginnings of computer history. Computing time on the huge von Neumann computers1 of that era was limited, very expensive and, of course, sometimes secret. Without going into too much detail, I recommend Kai Schlieter's book “Die Herrschaftsformel”2, which traces the developmental trajectories of computer science, defence technology, cybernetics and public relations.

What matters for us is the insight, already reached in the 1950s, that only multi-user, multitasking systems could be operated economically. Different programs on one central mainframe were executed in turn, each for a short time slice, so that several users could work with their applications at their own input and output devices, called terminals. This “time-sharing” principle3 forms the basis of every modern operating system.
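The time-sharing idea can be illustrated with a minimal round-robin sketch. It is a toy model, not how any historical mainframe was actually programmed: the function name `round_robin`, the user names and the time units are assumptions chosen purely for illustration.

```python
from collections import deque


def round_robin(jobs, time_slice):
    """Grant each job one short time slice in turn until all are finished.

    jobs: mapping of (hypothetical) user name -> required time units.
    Returns the list of (name, units_used) slices in the order granted.
    """
    queue = deque(jobs.items())
    schedule = []
    while queue:
        name, remaining = queue.popleft()
        used = min(time_slice, remaining)
        schedule.append((name, used))
        if remaining > used:
            # Not finished yet: go back to the end of the queue.
            queue.append((name, remaining - used))
    return schedule


if __name__ == "__main__":
    for name, used in round_robin({"alice": 5, "bob": 2, "carol": 3}, time_slice=2):
        print(f"{name} runs for {used} unit(s)")
```

Because every job only ever waits for a few short slices, each user at a terminal gets the impression of having the machine to themselves.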

With the introduction of the microprocessor in 19714, and at the latest with the explosive growth of home and personal computers in the 1970s and early 1980s, the paradigm shifted to single-user mode5 computers. Operating systems such as CP/M from 19746 or MS-DOS from 19817, a clone copied from it, are the better-known protagonists of this development. My very first PC from 1988, a Schneider EURO-PC with MS-DOS 3.3, dates from exactly this period.

It was these low-cost, single-user devices that, along with graphical user interfaces, found their way into the mainstream and into enterprise environments. And as in the 1950s, everyone knew: serious and economically viable business operations were only possible with shared multi-user and, above all, multitasking systems. After all, a computer usually spends most of its time idle8.

This is why development of the X Window System9, an abstraction layer for transmitting graphical screen contents and control commands to local and remote terminals, began in the Unix world in 1984. Unlike before, this could now also be done over a network. From 1989 on, a small start-up called Citrus copied this principle and optimised it for MS-DOS-based systems. The Independent Computing Architecture, or ICA protocol10, was born. A short time later the company was renamed to its current brand name, Citrix11. Microsoft quickly licensed this technology, integrated it as Terminal Services12 into its Windows NT product family and called it Remote Desktop Protocol13.

A matter of perspective

Citrix and Microsoft share the market for Windows-based terminal servers. For a long time, Citrix was considered to have the leaner, higher-performance protocol and benefited from its technological leadership. The “seamless” window mode and the smooth integration with local apps were decisive factors. However, by 2016 at the latest, Microsoft had caught up with Citrix.

Citrix also gained a technological lead over Microsoft in the area of virtualisation. Here too, in my opinion, Microsoft has caught up. But virtualisation is a separate topic that is not at issue here.

I cannot say which side is better. It is a matter of perspective and of where someone and their network are coming from. In any case, such A/B questions tend to be settled without evidence, like questions of faith, and rarely address the specific underlying problems. For instance, besides Microsoft and Citrix there is also Thinstuff14. It is my personal preference when older applications on even older server systems need to be segmented quickly and made accessible via a terminal server.

The better question is: what exactly should a terminal server do? Every time I see complete desktops inside terminals with all applications, web browsers and email usage, I ask myself what kind of thinking is behind this in terms of information security. Usually these systems are made available to everyone on the internet, without port knocking15, GeoIP blocking16 or IDS/IPS systems with Snort rules17 in front of them.

Recently, Citrix struggled with an unpatched vulnerability that made every system reachable from the Internet immediately attackable. Even the BSI had to issue a warning18, since more than 5000 companies were affected in Germany alone. Half a year later, in July 2020, a scan with this nice script19 still revealed dozens of unpatched systems in Shodan20.

At Microsoft, the security situation is by no means better. RDP was and still is the preferred gateway for attackers21. In fact, when the “BlueKeep” vulnerability, a fundamental design flaw in the implementation, was discovered last year, Microsoft had to patch even the long-unsupported Windows XP and Server 2003 releases22. But even without BlueKeep, FBI warnings had almost become a recurring ritual23.

Let us put it on record:

- Without additional protective mechanisms, terminal servers that are accessible from the Internet carry considerable risk.

- Both Citrix and Microsoft terminal servers are proprietary black boxes. No evidence-based conclusions can be drawn about their security. Both of them operate on the principle of “security by obscurity”24.

Solutions

If technologies or systems carry inherent security risks, the question is which measures can reduce those risks to an acceptable level. The following is only a sample of the possibilities:

- Multi-factor authentication e.g. TOTP

- Managed SSO25 with intrusion detection and heuristics

- Isolation by e.g. avoiding AD integration

- Use of IDS/IPS systems

- Restriction to fixed IPs e.g. with VPNs

- Use of controlled transition points (gateways or proxies)

- Application-specific functions and security concepts
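As one illustration of the "restriction to fixed IPs" item, a gateway or wrapper script could check the client address against an allowlist before it even offers a login. The sketch below uses only Python's standard `ipaddress` module. The networks shown are documentation and example ranges, and the names `ALLOWED_NETWORKS` and `is_allowed` are assumptions for illustration, not part of any product mentioned here.

```python
import ipaddress

# Hypothetical allowlist: a VPN subnet and one fixed office address.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.8.0.0/24"),
    ipaddress.ip_network("203.0.113.17/32"),
]


def is_allowed(client_ip, networks=ALLOWED_NETWORKS):
    """Return True if client_ip lies inside one of the allowed networks."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in networks)


if __name__ == "__main__":
    for ip in ("10.8.0.23", "198.51.100.5"):
        print(ip, "allowed" if is_allowed(ip) else "rejected")
```

In practice such a rule usually lives in the firewall or reverse proxy rather than in application code. The point is only that the decision is a simple, auditable membership test.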

Terminal Server - Looking ahead

A remote home office workstation can connect to a terminal server over the Internet in essentially two ways:

- with proprietary client software

- in a standard web browser

The far more flexible approach is integration into a web browser using common internet technologies. This allows third-party systems that are not managed by an in-house IT department to access any resource. Those who have made their infrastructure vendor-independent in recent years and prepared themselves for the so-called bring-your-own-device principle26 are in a much better position today when integrating home office workplaces.

I find it particularly depressing that in 2020 too many businesses and their HR departments still do not understand that home office, working environment and operating system have become hard recruitment factors for highly skilled employees. After all, nobody would think of hiring excavator operators only to press shovels into their hands to dig a pit.

Everyone should work in exactly the environment they are most familiar with and in which they can do their job efficiently.

To me, terminal servers with free HTTPS gateways are an indispensable part of a sustainable development.

Apache Guacamole

True, guacamole27 is a tongue twister. But as free software under the patronage of the Apache Foundation, it makes for an all the better gateway for RDP, VNC and SSH connections via HTTPS. I would like to show how this solution helped a medium-sized company to connect its employees with their desktop workstations in the office during the corona lockdown.

And this is meant quite literally. Every Windows desktop workstation can be converted into a terminal server with just one mouse click. A user connected in this way continues to work with their usual work environment and profile settings. Guacamole acts as a gateway between the two worlds by converting the RDP traffic on port 3389 of the internal network into a TLS-encrypted HTTPS connection on port 443.

The user only needs a standard web browser in the home office, if necessary on a private PC. No adjustments, plug-ins or additional client software are required. When Guacamole is integrated into Nextcloud28 as an external application, employees find everything in a familiar and, above all, uniform interface. Calendar, email and the office desktop in separate browser tabs are not only technically elegant but also handy for switching back and forth.

Guacamole can authenticate against OpenID, SAML or, if you prefer, LDAP. For security reasons I explicitly do not recommend LDAP. I deliberately chose the built-in database authentication, because I assume that sooner or later credentials will be lost, especially when they are used on the internet.

What Microsoft can offer for its RDP/RDS only awkwardly, in an undocumented and proprietary way via the Azure cloud29 and far outside any internet standard, Guacamole offers by default: TOTP multi-factor authentication, standard-compliant and secure in accordance with RFC 623830. Those who already use Nextcloud with TOTP and the FreeOTP31 app on their mobile phones will find their way around immediately. From an administrative point of view, the ready-to-use jail in Fail2Ban32 and the straightforward reverse proxy configuration33 are also very welcome.
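To show how little magic there is in the TOTP scheme of RFC 6238, here is a minimal sketch using only the Python standard library. It is not Guacamole's implementation. It assumes HMAC-SHA1 and a 30-second time step, which are the RFC's defaults, and the function names `hotp` and `totp` are my own choice.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret, counter, digits=6):
    """HOTP (RFC 4226): HMAC-SHA1 over the 8-byte counter, then dynamic truncation."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # low nibble of the last byte selects 4 bytes
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret, for_time=None, step=30, digits=6):
    """TOTP (RFC 6238): HOTP with the counter derived from Unix time."""
    if for_time is None:
        for_time = time.time()
    return hotp(secret, int(for_time) // step, digits)


if __name__ == "__main__":
    # Shared secret from the RFC 6238 test vectors (ASCII, not Base32).
    print(totp(b"12345678901234567890"))
```

Server and authenticator app share the secret once, typically as a Base32 string in a QR code, and from then on both sides compute the same short-lived code independently. Real deployments additionally accept a small window of neighbouring time steps to tolerate clock drift.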

In terms of functionality, all of this may be exactly what Microsoft offers with its HTML5 RD Web Bundle, apart from the crude multi-factor authentication outside all internet standards. However, the fundamental flaw with Microsoft is the mandatory installation on an AD-joined server running IIS. In my humble opinion, a complete misconception! What security gain does such an upstream system offer if an attacker who compromises the web server has thereby got hold of exactly what he wanted? A tool like mimikatz34 doesn’t care where it generates its “Golden Ticket”, as long as it is running on an AD member computer.

Linux terminal server

A blog post on terminal servers that does not show how easy it is to set one up under Debian? For any number of users and without licence costs or pitfalls? No, that will not do, so I will close with a short how-to, as a challenge and an encouragement to try it out yourself. Such a system is also perfect as a spare or as preparation for future migrations. Anything from a Raspberry Pi 4 to a powerful server can serve as hardware, depending only on how many users are supposed to work on the system simultaneously. Of course, this host should have a fixed IP address or DNS name that is reachable on the network.

- Perform a standard Debian server installation without a graphical interface and with SSH as the only selected installation option.

- Install a minimal GNOME shell with some essential desktop tools:

# apt install file-roller gthumb seahorse gnome-core gnome-dictionary \
    ffmpeg gnupg hunspell-de-de firefox-esr-l10n-de webext-ublock-origin \
    cifs-utils ssl-cert ca-certificates apt-transport-https

- Install xrdp30. This is the software that converts an X11 session to RDP and, thanks to compression and caching, makes it efficient to transmit over a network:

# apt install xrdp

- Adjust Polkit so that users are not asked for root credentials every time a colour profile is set up for their display. This is done by creating a new rule file with the text editor of your choice:

# nano /etc/polkit-1/localauthority.conf.d/02-allow-colord.conf

with this content:

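polkit.addRule(function(action, subject) {
    // Allow the colord actions below without a password prompt.
    // "{group}" is a placeholder: replace it with the group your RDP users belong to.
    // Note: polkit versions with the JavaScript rule engine load such rules
    // from *.rules files in /etc/polkit-1/rules.d/.
    if ((action.id == "org.freedesktop.color-manager.create-device" ||
         action.id == "org.freedesktop.color-manager.create-profile" ||
         action.id == "org.freedesktop.color-manager.delete-device" ||
         action.id == "org.freedesktop.color-manager.delete-profile" ||
         action.id == "org.freedesktop.color-manager.modify-device" ||
         action.id == "org.freedesktop.color-manager.modify-profile") &&
        subject.isInGroup("{group}")) {
        return polkit.Result.YES;
    }
});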

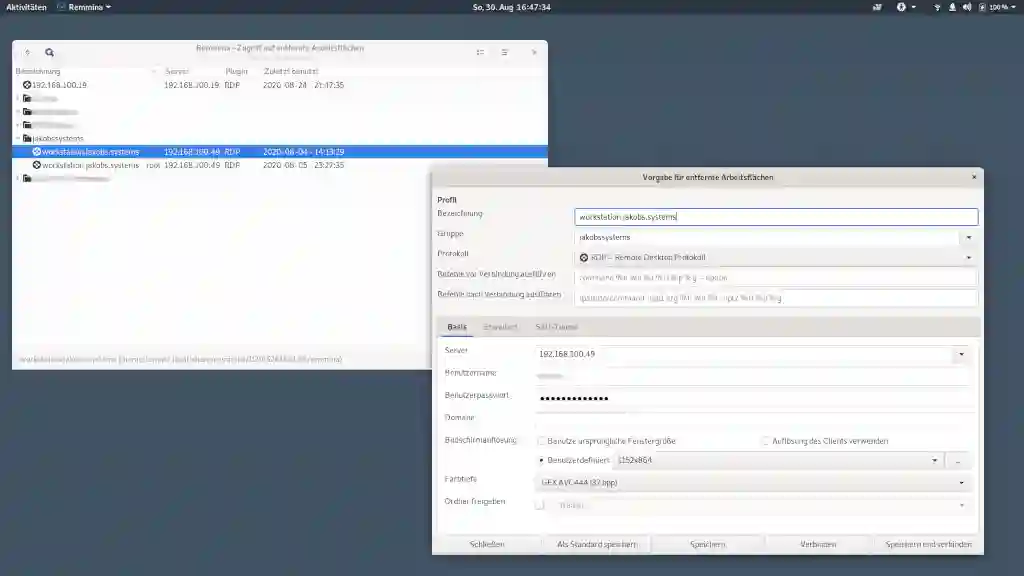

Now the only thing missing is users for testing. Simply create a few users with “adduser” and connect as them from other computers via RDP on port 3389. On Windows you can use mstsc.exe for this. On Linux I prefer Remmina35, because it provides a neat connection list.

Much like a Windows terminal server, this Linux terminal server can be run behind Guacamole. Unlike with Windows hosts, the RDP connection can also be tunnelled securely over SSH.
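The SSH tunnel amounts to a single local port forward. The following sketch merely assembles the corresponding OpenSSH command line. The host name, user name and local port 13389 are placeholders, and `ssh_tunnel_command` is a hypothetical helper, not part of xrdp or Remmina.

```python
import shlex


def ssh_tunnel_command(ssh_host, ssh_user, local_port=13389, rdp_port=3389):
    """Build an OpenSSH command that forwards a local port to the RDP port.

    -N: do not run a remote command, only forward ports.
    -L: listen on 127.0.0.1:local_port and forward to 127.0.0.1:rdp_port
        as seen from the terminal server itself.
    """
    forward = f"127.0.0.1:{local_port}:127.0.0.1:{rdp_port}"
    return ["ssh", "-N", "-L", forward, f"{ssh_user}@{ssh_host}"]


if __name__ == "__main__":
    # Placeholder host and user; print the command instead of running it.
    print(shlex.join(ssh_tunnel_command("terminal.example.org", "alice")))
```

While the tunnel is open, the RDP client connects to localhost on the chosen local port instead of the server's port 3389, which then no longer needs to be reachable from outside.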

Again, it is necessary to balance out the risks.

The next and third part of this blog series explains in detail how.

You are welcome,

Tomas Jakobs

https://www.westendverlag.de/buch/die-herrschaftsformel-2/

https://en.wikipedia.org/wiki/Time-sharing_system_evolution

https://www.computerhistory.org/siliconengine/microprocessor-integrates-cpu-function-onto-a-single-chip/

https://en.wikipedia.org/wiki/Independent_Computing_Architecture

https://en.wikipedia.org/wiki/Citrix_Systems#Early_history

https://news.microsoft.com/1998/06/16/microsoft-releases-windows-nt-server-4-0-terminal-server-edition/

https://www.bsi.bund.de/DE/Presse/Pressemitteilungen/Presse2020/Citrix_Schwachstelle_160120.html

https://www.heise.de/hintergrund/Remote-Desktop-RDP-Liebstes-Kind-der-Cybercrime-Szene-1-4-4700048.html

https://docs.microsoft.com/en-us/azure/active-directory/authentication/concept-mfa-howitworks

https://guacamole.apache.org/doc/gug/proxying-guacamole.html